Docker

Understanding Virtual Machines and Containers

Virtual machines (VMs) are like computer "slices" created within a single physical server. Imagine having a powerful computer, but instead of running just one operating system and one set of programs, you can divide it into multiple smaller computers, each running its own operating system and programs. For example, you could have one VM running Windows and another running Linux on the same server.

Containers, on the other hand, are like individual packages for software applications. They contain everything the application needs to run, such as code, runtime, libraries, and settings. It's like shipping a product in a sealed container: you know everything inside is exactly what's needed to run the application. For instance, you can have a container for a web server, another for a database, and so on.

Enter Containers: Addressing Resource Efficiency

Imagine you have a big server with lots of memory and processing power. With virtual machines, you might divide it into several smaller virtual servers, but each still needs its own operating system, which can be inefficient. For example, if you have a server with 32 GB of RAM and you create four VMs, each with 8 GB of RAM, all 32 GB is reserved up front, and every VM spends part of its share on running its own operating system, even if the applications inside don't need that much.

Containers solve this problem by sharing the host server's operating system. They only use what they need, so you can run many more containers on the same server without wasting resources. Going back to the example, if you have a containerized environment instead, you could run multiple applications on the same server, each in its own container, and they would all share the server's resources more efficiently.
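To make the comparison concrete, here is a rough back-of-the-envelope sketch in Python. The 32 GB host and 8 GB per VM come from the example above; the 1 GB reserved for the host operating system and the 0.5 GB per application are assumptions chosen purely for illustration, and real numbers will vary a lot.

```python
# Back-of-the-envelope comparison of VMs and containers on one host.
# Only the 32 GB host and the 8 GB per VM come from the example in the text;
# the other two figures are hypothetical.

HOST_RAM_GB = 32      # from the example
VM_RAM_GB = 8         # fixed allocation per VM, from the example
HOST_OS_GB = 1.0      # assumed memory kept aside for the single host OS
APP_RAM_GB = 0.5      # assumed memory one application actually needs


def max_vms(host_ram_gb: float, vm_ram_gb: float) -> int:
    """Number of fixed-size VMs that fit in the host's RAM."""
    return int(host_ram_gb // vm_ram_gb)


def max_containers(host_ram_gb: float, app_ram_gb: float, host_os_gb: float) -> int:
    """Number of containers that fit when only the host OS is running."""
    return int((host_ram_gb - host_os_gb) // app_ram_gb)


print("VMs that fit:", max_vms(HOST_RAM_GB, VM_RAM_GB))
print("Containers that fit:", max_containers(HOST_RAM_GB, APP_RAM_GB, HOST_OS_GB))
```

With these assumed numbers the same host fits 4 VMs or 62 containers. The exact ratio is not the point; the point is that containers pay for one operating system instead of one per workload.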

Navigating Drawbacks and Enhancing Security

While containers offer great resource efficiency, they come with some security concerns. Since they share the host operating system, there's a risk that one container could compromise the others or even the host itself. For example, if a malicious program gets into one container, it might be able to access other containers or the host system.

Virtual machines, on the other hand, have stronger isolation because each VM has its own operating system and kernel. If one VM is compromised, the attacker still has to break through the hypervisor to reach the others. However, VMs are heavier and slower to start compared to containers.

To address container security concerns, the Linux kernel provides features such as namespaces and control groups (cgroups). Namespaces limit what a container can see (its own processes, network interfaces, and filesystem mounts), while cgroups limit how much CPU, memory, and other resources it can use. Together they create boundaries between containers and reduce the risk of one container interfering with another or with the host system.

Resource usage still adds up, though. If a host runs 100 containers, even the ones that are idle hold on to memory, and stopped containers continue to occupy disk space. Efficient resource management and scheduling strategies are crucial for optimizing resource utilization in containerized environments.
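As a small illustration of cgroup-backed limits, the sketch below builds a 'docker run' command that caps memory, CPU, and the number of processes for a container. The helper name and the default limit values are hypothetical; the flags themselves ('--memory', '--cpus', '--pids-limit') are standard 'docker run' options. The code only prints the command, so it runs even without Docker installed.

```python
import shlex


def limited_run_command(image: str, memory: str = "256m",
                        cpus: str = "0.5", pids_limit: int = 100) -> list[str]:
    """Build a 'docker run' command with resource limits.

    The default limit values are arbitrary examples, not recommendations.
    """
    return [
        "docker", "run", "--detach",
        "--memory", memory,               # cap on RAM
        "--cpus", cpus,                   # cap on CPU time (fraction of a core)
        "--pids-limit", str(pids_limit),  # cap on number of processes
        image,
    ]


# Print the command instead of executing it.
print(shlex.join(limited_run_command("nginx")))
```

To actually start the container, you could pass the same list to 'subprocess.run' on a machine where Docker is installed.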

Architecture of Containers:

Running containers directly on physical servers means you also have to maintain that hardware yourself. It's more common to run containers on virtual machines (VMs), because the virtualization platform or cloud provider takes care of the underlying hardware, and VMs are easy to provision and replace. Containers, being lightweight, don't have their own full operating system and use the host's kernel and resources. This makes container images smaller and easier to transfer than VM snapshots.

Advantages of Containers:

Lightweight nature reduces resource consumption.

Smaller container size facilitates easier transfer and deployment.

Allows for flexibility in installing different applications in each container.

Why are containers lightweight?

Containers are lightweight because they don't carry a full operating system the way a virtual machine does. They share the kernel of the host they're running on, which means they only need to bring the essentials: the application itself plus the libraries and settings it depends on. This makes containers much smaller and faster to start up compared to virtual machines. So, they're like streamlined versions of virtual machines that get the job done without all the extra baggage. As an example, compare the size of an OS container image with the size of a full installation of the same OS.

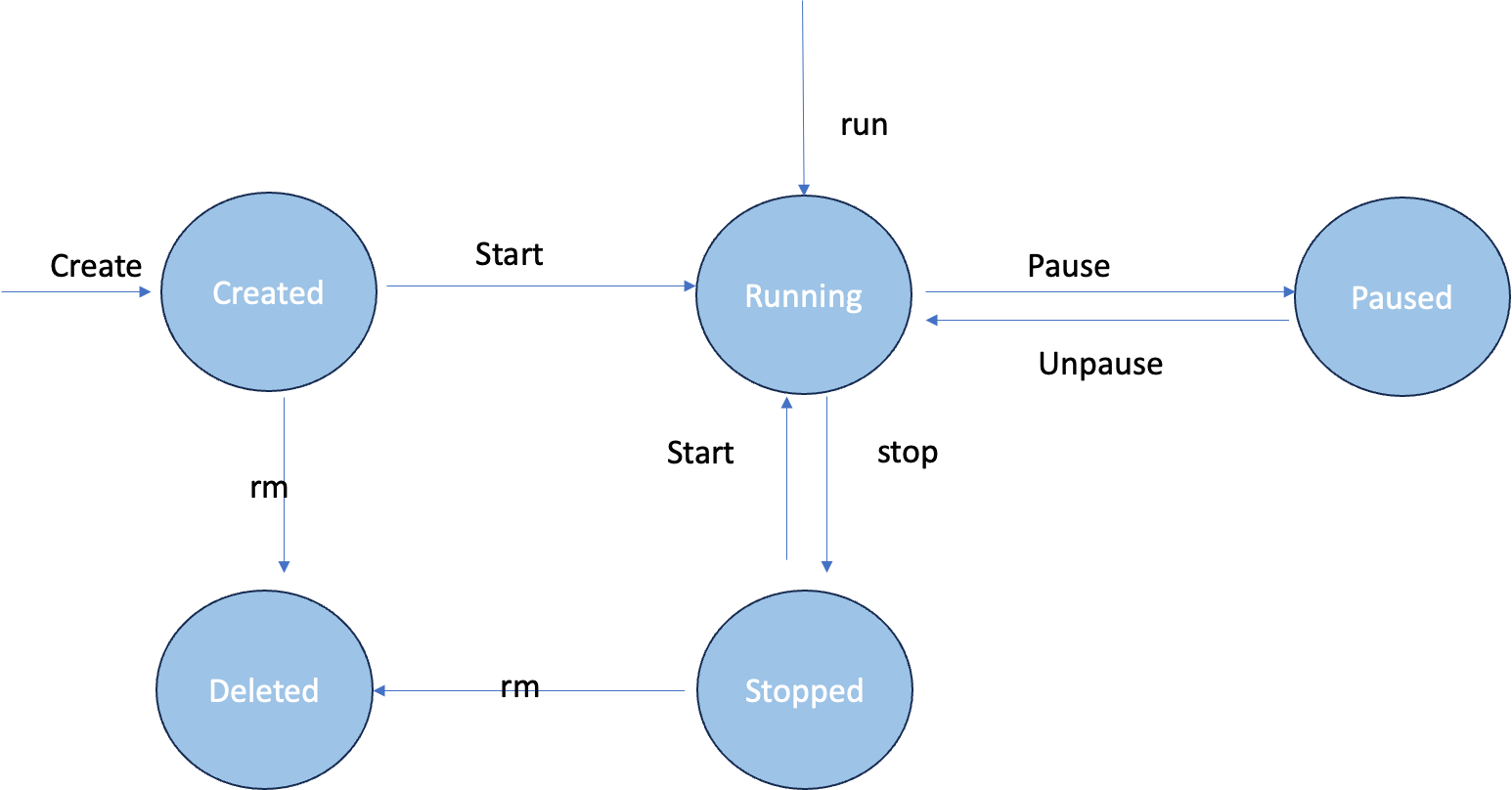

Lifecycle of Docker:

Create Dockerfile: Begin by crafting a Dockerfile, a text file containing instructions on how to build your Docker image.

Build Docker Image: Utilize the Dockerfile to build your Docker image using the 'docker build' command. This process packages all the necessary dependencies and configurations into a single image.

Run Docker Container: Once the image is built, you can create and run Docker containers based on that image using the 'docker run' command. Containers are the actual instances of your application running in isolation, each with its own environment and resources.
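The three lifecycle steps can be sketched in Python as follows. This is a minimal illustration, not a recommended project layout: the Dockerfile contents, the 'app.py' script, and the 'hello-app:demo' tag are all made up for the example. The script writes the build context to a temporary directory and prints the 'docker build' and 'docker run' commands rather than executing them, so it works without Docker installed.

```python
import tempfile
from pathlib import Path

# Step 1: a tiny Dockerfile (hypothetical example application).
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY app.py .
CMD ["python", "app.py"]
"""


def prepare_build_context(directory: Path) -> Path:
    """Write the example app and its Dockerfile into the given directory."""
    (directory / "app.py").write_text('print("hello from a container")\n')
    dockerfile = directory / "Dockerfile"
    dockerfile.write_text(DOCKERFILE)
    return dockerfile


def build_command(tag: str, context: str) -> list[str]:
    """Step 2: build an image from the Dockerfile in the context directory."""
    return ["docker", "build", "--tag", tag, context]


def run_command(tag: str) -> list[str]:
    """Step 3: start a container from the image; --rm removes it on exit."""
    return ["docker", "run", "--rm", tag]


with tempfile.TemporaryDirectory() as tmp:
    prepare_build_context(Path(tmp))
    print(" ".join(build_command("hello-app:demo", tmp)))
    print(" ".join(run_command("hello-app:demo")))
```

On a machine with Docker, running the two printed commands in order (while the build context still exists) would build the image and then start a container from it.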

What is Docker?

Docker is an open platform for developing, shipping, and running applications. It lets you separate your applications from the infrastructure they run on, which makes it easier to deliver new software quickly. Docker also lets you manage that infrastructure in the same way you manage your applications. By using Docker's methods for shipping, testing, and deploying code, you can significantly reduce the delay between writing code and running it in production.

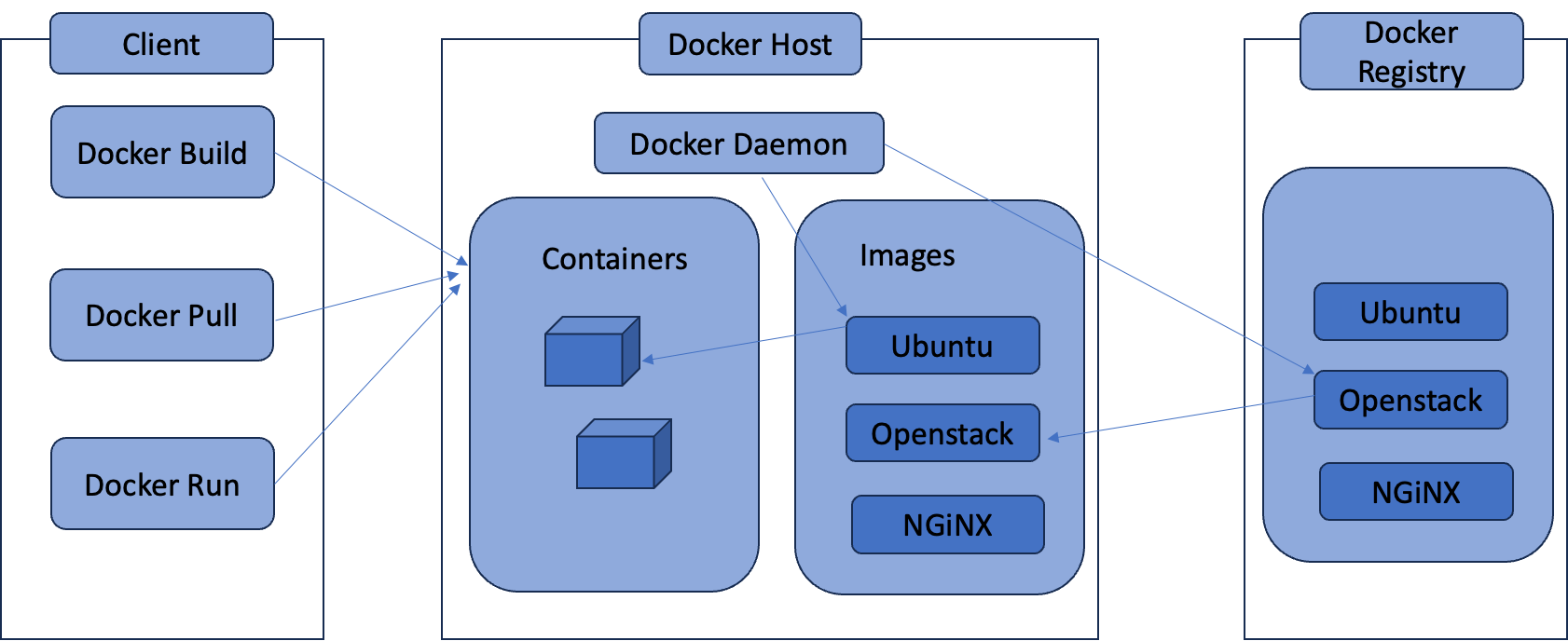

Docker Architecture

Docker uses a client-server architecture with two main players: the client and the daemon. The client gives instructions, while the daemon does the hard work of building, running, and managing containers. They can run on the same system, or the client can talk to a daemon on a different machine. They communicate through a REST API, over a UNIX socket or a network interface. Another Docker client is Docker Compose, which helps you work with applications made up of a group of containers.

Docker mainly has 3 components:

Docker Host

The Docker host runs the Docker daemon and holds the images and containers. It provides the underlying infrastructure and resources necessary for creating, running, and managing containers. It can be a physical machine, a virtual machine, or a cloud instance. The Docker host runs the Docker engine (daemon), which receives requests from the Docker client to perform tasks such as creating, starting, stopping, and removing containers, as well as managing images and networks.

Docker Client

The Docker client is how users interact with the Docker daemon (dockerd). When you run commands like 'docker run', the client sends them to 'dockerd', which executes them. The 'docker' command uses the Docker API, and it can communicate with more than one daemon.
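To show that the client really is just speaking HTTP to the daemon, here is a minimal sketch that asks the daemon for its version using only Python's standard library. It assumes a Linux host where the daemon listens on the default UNIX socket '/var/run/docker.sock' and the current user is allowed to access it. The class and function names are made up for this example. Nothing is sent until 'daemon_version()' is called.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that talks over a UNIX socket instead of TCP."""

    def __init__(self, socket_path: str):
        # The host name is only used for the Host header.
        super().__init__("localhost")
        self.socket_path = socket_path

    def connect(self) -> None:
        # Replace the usual TCP connection with a UNIX socket connection.
        self.sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        self.sock.connect(self.socket_path)


def daemon_version(socket_path: str = "/var/run/docker.sock") -> dict:
    """Send GET /version to the Docker daemon and return the decoded JSON."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/version")
        response = conn.getresponse()
        return json.loads(response.read())
    finally:
        conn.close()
```

On a host with a running daemon, 'daemon_version()["Version"]' should give the engine version, similar to what 'docker version' reports for the server.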

Docker Registry

This is where Docker images are stored. Docker Hub is a public registry accessible to everyone, and Docker looks for images on Docker Hub by default unless you specify another registry. You can also run your own private registry, and Docker Hub itself offers private repositories. When you use 'docker pull', the image is downloaded from the configured registry; when you use 'docker push', the image is uploaded to it.
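The "Docker Hub unless specified otherwise" rule can be illustrated with a small sketch that expands a short image name into its full form. This is a simplified approximation of how image names are resolved, written for illustration only: the function name is made up, and it does not handle digests (names containing '@sha256:...') or validate its input.

```python
def normalize_image(name: str) -> str:
    """Expand a short image name to registry/repository:tag (simplified)."""
    registry = "docker.io"  # Docker Hub is the default registry
    remainder = name

    # The first path component is treated as a registry host only if it
    # looks like one: it contains a dot or a port, or it is "localhost".
    first, slash, rest = name.partition("/")
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest

    # A tag is a colon in the last path component; the default is "latest".
    last_segment = remainder.rsplit("/", 1)[-1]
    if ":" in last_segment:
        repository, tag = remainder.rsplit(":", 1)
    else:
        repository, tag = remainder, "latest"

    # Official images on Docker Hub live under the "library" namespace.
    if registry == "docker.io" and "/" not in repository:
        repository = "library/" + repository

    return f"{registry}/{repository}:{tag}"


for short_name in ["nginx", "myuser/myapp", "registry.example.com:5000/team/app:2.0"]:
    print(short_name, "->", normalize_image(short_name))
```

Here 'myuser/myapp' and 'registry.example.com:5000' are placeholder names. The first two examples resolve to Docker Hub, while the third keeps its own registry host, which is how a pull or push gets directed to a private registry.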

Conclusion:

To conclude today's blog: containers are like compact packages for software that use resources efficiently by sharing the host's operating system. They're lightweight and quick to start, and their security concerns are addressed through measures like namespace isolation and cgroups. We also covered Docker's key components (Docker Host, Client, and Registry) and how Docker simplifies app development and deployment. Hopefully this serves as a helpful starting point for anyone looking to grasp the basics of Docker and containers, with practical insights into their benefits and how they work.